Cluster Computing Definition

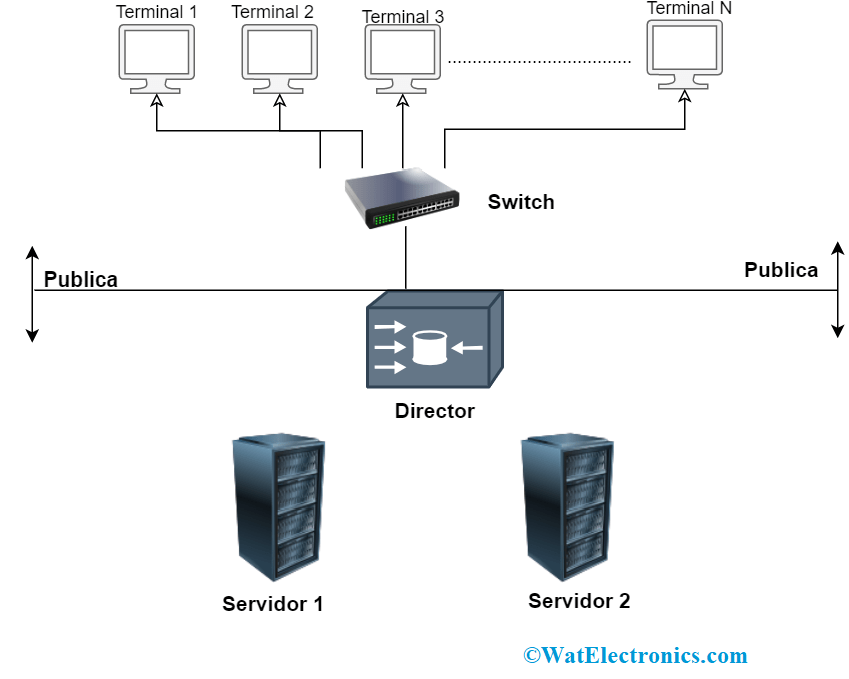

In a computer system, a cluster is a group of servers and other resources that act like a single system and enable high availability and, in some cases, load balancing and parallel processing. Cluster computing is the technique of linking two or more computers into a network, usually through a local area network (LAN), in order to take advantage of the combined parallel processing power of those computers.

An Overview of Cluster Computing (GeeksforGeeks)

In computing, the word "cluster" can mean (1) a group of sectors in a storage device or (2) a group of connected computers.

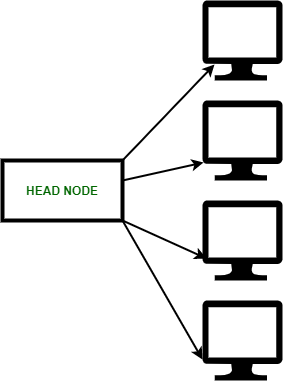

Cluster computing definition: in its most basic form, cluster computing describes a system that consists of two or more computers or systems, often known as nodes. In computing, a cluster may refer to two different things. Cluster computing is mainly defined as the technique of linking two or more computers into a local area network.

A computer cluster provides much faster processing speed, larger storage capacity, better data integrity, superior reliability, and wider availability of resources. Each device in the cluster is called a node. The term "cluster" refers to independent computers combined into a unified system through software and networking.

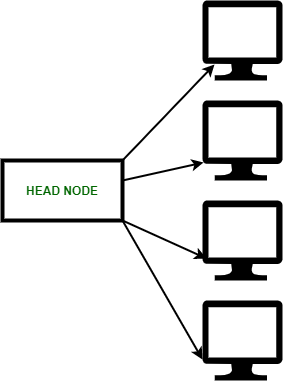

Definition and characteristics of a cluster: cluster computing is a form of computing in which a group of computers is linked together so that they can act as a single entity. The parallel nodes that form a computer cluster are connected to one another and commonly run an operating system such as Linux or other free software.

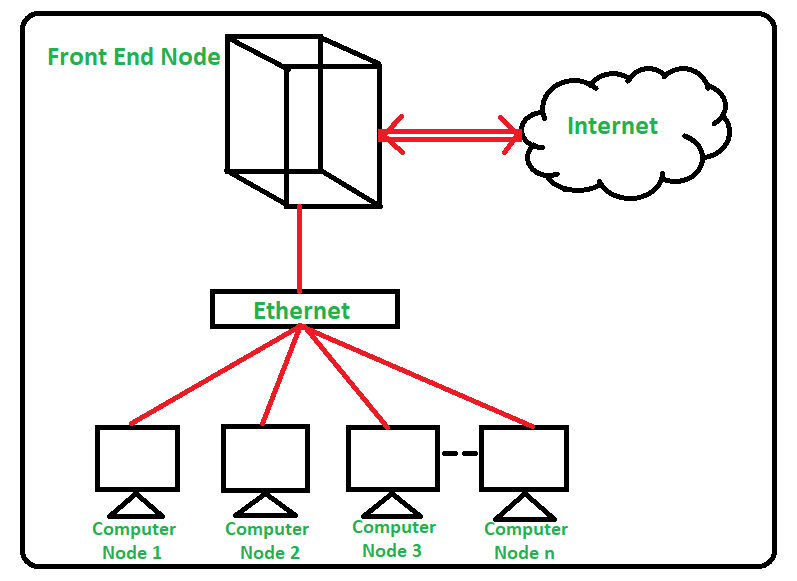

A cluster, in the context of servers, is a group of computers that are connected with each other and operate closely to act as a single computer. Clustering is used to increase availability and to facilitate scalability. Cluster computing is a powerful computing paradigm for handling high workloads and deploying specific applications, but what if we applied it to edge computing?

Clusters are typically used either for high availability (greater reliability) or for high-performance computing (HPC), which provides more computational power than a single computer can. The technology is applied to a wide range of applications, such as mathematical, scientific, or educational tasks, by pooling several computing resources. This allows workloads consisting of a large number of individual parallelizable tasks to be distributed among the nodes in the cluster.
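As a rough illustration of how independent, parallelizable tasks might be spread across nodes, here is a toy greedy scheduler that always hands the next task to the least-loaded node (the "longest processing time first" heuristic). This is a minimal sketch under stated assumptions: the node names and per-task costs are hypothetical, and real clusters leave this job to a dedicated workload manager such as Slurm rather than code like this.

```python
import heapq
from typing import Dict, List


def distribute_tasks(task_costs: List[float], nodes: List[str]) -> Dict[str, List[int]]:
    """Greedily assign each task (by index) to the node with the least work so far.

    Assumption: task costs are known up front and tasks are fully independent.
    """
    if not nodes:
        raise ValueError("a cluster needs at least one node")
    # Min-heap of (current load, node position, node name); position breaks ties.
    heap = [(0.0, pos, name) for pos, name in enumerate(nodes)]
    heapq.heapify(heap)
    assignment: Dict[str, List[int]] = {name: [] for name in nodes}
    # Placing the largest tasks first usually gives a better balance.
    order = sorted(range(len(task_costs)), key=lambda t: task_costs[t], reverse=True)
    for task in order:
        load, pos, name = heapq.heappop(heap)  # least-loaded node
        assignment[name].append(task)
        heapq.heappush(heap, (load + task_costs[task], pos, name))
    return assignment


# Hypothetical example: six tasks with made-up costs, two nodes.
print(distribute_tasks([5, 3, 3, 2, 2, 1], ["node-a", "node-b"]))
# {'node-a': [0, 3, 5], 'node-b': [1, 2, 4]}
```

In this example each node ends up with a total cost of 8, so neither node sits idle while the other is still working.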

Defining cluster computing: a cluster is typically a homogeneous network. A computer cluster is a single logical unit consisting of multiple computers that are linked through a LAN.

Each computer connected to the network is called a node. Related topics include architectures, operating systems, parallel processing, and programming languages. The use of computers in our society has developed considerably since the modern computer era began in 1945; until about 1985, computers were large and expensive. Cluster computing is a rapidly maturing technology that seems certain to…

At a high level, a computer cluster is a group of two or more computers, or nodes, that run in parallel to achieve a common goal. A cluster can vary in size from a small workstation to several networks. When we talk about clustering (a server cluster or server farm), we mean computing technologies designed to consolidate multiple independent computers, called nodes, so that they can be managed as a whole and go beyond the limitations of a single computer.

An eternal struggle in any IT department is finding a way to squeeze the maximum processing power out of a limited budget. Join me in this article as we dive into the basics of cluster computing on the edge, its benefits, and how you can utilise it in your own projects. In cluster computing, two or more computers work together to solve a problem.
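A common way for several machines to "work together to solve a problem" is scatter/gather: split the input into chunks, let each node compute a partial result, then combine the partials. The sketch below shows only that pattern, under loud assumptions: threads from Python's standard `concurrent.futures` stand in for cluster nodes (so there is no real speed-up here), and the sum-of-squares workload is a hypothetical example. On a real cluster the chunks would travel over the LAN, for instance via MPI.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split(data: Sequence[int], parts: int) -> List[Sequence[int]]:
    """Scatter: cut the input into `parts` contiguous, nearly equal chunks."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks get one additional element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk: Sequence[int]) -> int:
    """The work each (simulated) node does independently on its own chunk."""
    return sum(x * x for x in chunk)


def cluster_sum_of_squares(data: Sequence[int], nodes: int = 4) -> int:
    # Threads stand in for nodes; a real cluster would ship chunks over the network.
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        partials = list(pool.map(partial_sum_of_squares, split(data, nodes)))
    # Gather: combine the partial results into the final answer.
    return sum(partials)


print(cluster_sum_of_squares(list(range(1, 101))))  # 338350
```

The key design point is that each chunk can be processed without talking to the others; only the small partial results need to be sent back and combined.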

Cluster computing offers solutions to demanding problems through faster computing speed and higher data integrity. In a typical homogeneous cluster, each node has the same hardware and the same operating system, and the nodes work together to execute applications and perform other tasks.

The cluster devices are connected via a fast local area network (LAN), and the networked computers essentially act as a single, much more powerful machine. Cluster computing, often framed as high-performance computing (HPC), is a form of computing in which a group of computers, often called nodes, are connected through a LAN so that they behave like a single machine.

Definition of cluster computing: cluster computing refers to many computers that connect to a network and work as a unit. "Cluster Computing" is also the name of a journal of networks and applications covering parallel processing and distributed computing. Fast local area networks let a cluster of computers operate at an exceptionally rapid pace.

There are a number of reasons for people to use cluster computers for computing tasks ranging from an inability to afford a single computer with the computing capability of a cluster to a desire to ensure that a computing system is always available.
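The "always available" goal usually comes down to failover: if one node cannot serve a request, another node takes it over. The following is a minimal, simulated sketch of that idea, not how any particular cluster manager works. The `NodeDown` exception, the node names, and the handler functions are all hypothetical stand-ins for real health checks and network calls.

```python
from typing import Callable, Dict, List


class NodeDown(Exception):
    """Hypothetical error raised by a simulated node that cannot serve a request."""


def call_with_failover(nodes: List[str],
                       handlers: Dict[str, Callable[[str], str]],
                       request: str) -> str:
    """Try each node in order and return the first successful response."""
    errors = []
    for name in nodes:
        try:
            return handlers[name](request)
        except NodeDown as exc:
            # This node failed; record why and fall through to the next one.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all nodes failed: " + "; ".join(errors))


# Simulated nodes: node-a is down, node-b is healthy.
def broken(_request: str) -> str:
    raise NodeDown("no heartbeat")


def healthy(request: str) -> str:
    return f"node-b handled {request}"


print(call_with_failover(["node-a", "node-b"],
                         {"node-a": broken, "node-b": healthy}, "job-42"))
# node-b handled job-42
```

From the caller's point of view the cluster still answers, even though one of its nodes has failed; the request only errors out when every node is down.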

