sklearn.datasets.make_classification: n_clusters_per_class

The dataset contains 4 classes with 10 features and the number of samples is 10000. The color of each point represents its class label.


Should the samples of one class be grouped together?

For the meaning of the parameters, you can follow the documentation. One question builds a small two-feature dataset with `X, y = make_classification(n_samples=100, n_features=2, n_redundant=0, n_informative=2, n_clusters_per_class=1, random_state=1)`, changes the first sample to a new class with `y[0] = 2`, and then cross-validates with `clf = LogisticRegression(random_state=1)` and `cv = StratifiedKFold(n_splits=2, random_state=1)` (recent scikit-learn versions also require `shuffle=True` when `random_state` is set). It takes `train, test` from `list(cv.split(X, y))` and computes `yhat_proba = cross_val_predict(clf, X, y, cv=cv)`. The full signature, with its defaults, is `make_classification(n_samples=100, n_features=20, n_informative=2, n_redundant=2, n_repeated=0, n_classes=2, n_clusters_per_class=2, weights=None, flip_y=0.01, class_sep=1.0, hypercube=True, shift=0.0, scale=1.0, shuffle=True, random_state=None)` (source).
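Below is a runnable sketch of the cross-validation snippet above. It shows what happens when one training fold never sees the new class 2. It makes two assumptions. First, `shuffle=True` is added to `StratifiedKFold`, which recent scikit-learn needs whenever `random_state` is set. Second, `method="predict_proba"` is passed to `cross_val_predict`, a guess based on the variable name `yhat_proba`.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_predict

# Two informative features, one cluster per class, as in the snippet above.
X, y = make_classification(n_samples=100, n_features=2, n_redundant=0,
                           n_informative=2, n_clusters_per_class=1,
                           random_state=1)

# Change the first sample to a new class, so class 2 has a single member.
y[0] = 2

clf = LogisticRegression(random_state=1)
# Assumption: shuffle=True, required by recent scikit-learn with random_state.
cv = StratifiedKFold(n_splits=2, shuffle=True, random_state=1)

# Assumption: predict_proba. The fold whose model never saw class 2 gets a
# zero-filled probability column for that class (with a warning).
yhat_proba = cross_val_predict(clf, X, y, cv=cv, method="predict_proba")
print(yhat_proba.shape)
print(yhat_proba[0])
```

Expect two warnings: one about the single-member class and one about the training fold that has fewer classes. Every row still sums to 1.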

Here we'll extract 15 percent of it as test data. Class 0 is drawn with `plt.scatter(X[y == 0, 0], X[y == 0, 1], color='tab:red', edgecolors='k', s=120, alpha=0.9)`. Note that the rule below should be followed.
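Here is a minimal sketch of holding out 15 percent of the data. It assumes `train_test_split` with `stratify=y` and an arbitrary `random_state=0`; neither appears in the original. The dataset mirrors the 4-class, 10-feature, 10,000-sample description.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# 4 classes, 10 features, 10,000 samples (random_state=0 is an assumption).
X, y = make_classification(n_samples=10000, n_features=10, n_classes=4,
                           n_clusters_per_class=1, random_state=0)

# Hold out 15 percent as test data; stratify so every class keeps its share.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.15, stratify=y, random_state=0)

print(X_train.shape, X_test.shape)
```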

For easy visualization, all datasets have 2 features, plotted on the x and y axes. In scikit-learn, the bagging classifier is named `BaggingClassifier`.
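Bagging with `BaggingClassifier` could be sketched as follows. The sketch assumes the default decision-tree base estimator, 10 estimators, and the 4-class data with a 15 percent test split. `random_state=0` is an arbitrary choice.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=10000, n_features=10, n_classes=4,
                           n_clusters_per_class=1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.15, stratify=y, random_state=0)

# Each of the 10 estimators (decision trees by default) is fit on a bootstrap
# sample, drawn with replacement, of the training rows.
bag = BaggingClassifier(n_estimators=10, random_state=0)
bag.fit(X_train, y_train)
accuracy = bag.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```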

The setup imports the generator with `from sklearn.datasets import make_classification` and pandas with `import pandas as pd`. It then generates synthetic data and labels for `n_samples` samples. The docstring of `sklearn.datasets.make_classification` (same signature and defaults as above) summarizes it as "Generate a random n-class classification problem." Plugging the post's values into the cluster constraint gives $3 \times 1 \le 2^{1}$, which is false: the inequality is not satisfied.
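Here is a sketch of the pandas step. It assumes the goal is to collect the synthetic features and labels in one `DataFrame`. The values `n_samples=100`, `n_features=5` and `random_state=0`, and the column names, are illustrative choices, not from the original.

```python
import pandas as pd
from sklearn.datasets import make_classification

# Generate synthetic data and labels (sizes chosen here for illustration).
n_samples, n_features = 100, 5
X, y = make_classification(n_samples=n_samples, n_features=n_features,
                           n_informative=2, n_redundant=2, random_state=0)

# One column per feature plus a "label" column; the names are arbitrary.
df = pd.DataFrame(X, columns=[f"feature_{i}" for i in range(n_features)])
df["label"] = y
print(df.head())
print(df["label"].value_counts())
```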

The plotting script starts with `print(__doc__)`, `import pylab as pl` and `from sklearn.datasets import make_classification`. In current code, `import matplotlib.pyplot as plt` is the usual replacement for `pylab`.

Imbalanced Classification Dataset

Each panel is drawn with `pl.scatter(X1[:, 0], X1[:, 1], marker='o')`. A related question uses `from sklearn.datasets import make_classification` and `X, y = make_classification(n_samples=1000, n_features=2, n_informative=2, n_redundant=0, n_classes=2, n_clusters_per_class=1, random_state=0)`. It asks: what formula is used to come up with the `y` values?

`n_clusters_per_class` is the number of clusters per class. For example, `X, y = make_classification(n_samples=100, n_features=2, n_informative=2, n_redundant=0, n_repeated=0, n_classes=2, n_clusters_per_class=2, class_sep=4.0, shuffle=False, random_state=12346)` gives two clusters for each of the two classes, and `class_sep=4.0` pushes them far apart.
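As far as I can tell from the scikit-learn source, `y` is not computed from `X` by a formula. Instead, each cluster `k` is assigned the label `k % n_classes`, and its points are drawn around a hypercube vertex. Then `flip_y` randomly reassigns a fraction of the labels. The sketch below reuses the example's parameters but adds `flip_y=0`, so the block structure of the labels is visible.

```python
import numpy as np
from sklearn.datasets import make_classification

# The example's parameters plus flip_y=0 (an addition): no random relabeling.
X, y = make_classification(n_samples=100, n_features=2, n_informative=2,
                           n_redundant=0, n_repeated=0, n_classes=2,
                           n_clusters_per_class=2, class_sep=4.0, flip_y=0,
                           shuffle=False, random_state=12346)

# Cluster k gets label k % n_classes. Without shuffling, the labels come in
# blocks of 25: clusters 0 and 2 are class 0, clusters 1 and 3 are class 1.
print(y.reshape(4, 25)[:, 0])
```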

`weights` gives the proportions of samples assigned to each class. If `None`, then classes are balanced. This example illustrates the `datasets.make_classification`, `datasets.make_blobs` and `datasets.make_gaussian_quantiles` functions.

The final 2 plots use `make_blobs` and `make_gaussian_quantiles`. `make_classification` initially creates clusters of points normally distributed (std = 1) about the vertices of an `n_informative`-dimensional hypercube. For `n_samples`, 100 seems like a good, manageable amount.
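To check the hypercube description, this sketch reuses the 2-feature, `class_sep=4.0` settings, adds `flip_y=0`, and averages each block of 25 rows. It assumes, as in the scikit-learn implementation, that centroids sit at plus or minus `class_sep` in every informative dimension. Under that assumption, the cluster means should land near the corners of a square.

```python
import numpy as np
from sklearn.datasets import make_classification

class_sep = 4.0
X, y = make_classification(n_samples=100, n_features=2, n_informative=2,
                           n_redundant=0, n_repeated=0, n_classes=2,
                           n_clusters_per_class=2, class_sep=class_sep,
                           flip_y=0, shuffle=False, random_state=12346)

# With shuffle=False the 4 clusters are consecutive blocks of 25 rows.
means = X.reshape(4, 25, 2).mean(axis=1)
print(np.round(means, 2))

# Each mean should sit close to one corner of the square {-4, 4} x {-4, 4}.
vertices = {tuple(int(s) for s in v) for v in np.sign(means)}
print(sorted(vertices))
```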

For `make_classification`, three binary and two multi-class classification datasets are generated, with different numbers of informative features and clusters per class. The parameters must satisfy $n_{\text{classes}} \times n_{\text{clusters per class}} \le 2^{n_{\text{informative}}}$, and the post then plugs its own values into this inequality.

Note that if `len(weights) == n_classes - 1`, then the last class weight is automatically inferred. Classifier including inner balancing samplers.
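Here is a short check of the `weights` inference. It assumes `flip_y=0`, added so random label flips do not blur the proportions. It also uses `weights=[0.75]`, chosen because 0.75 and 0.25 are exact in floating point.

```python
import numpy as np
from sklearn.datasets import make_classification

# Only n_classes - 1 = 1 weight is given; the last one is inferred as 1 - 0.75.
# flip_y=0 keeps random label flips from perturbing the proportions.
X, y = make_classification(n_samples=1000, n_classes=2, weights=[0.75],
                           flip_y=0, random_state=0)

counts = np.bincount(y)
print(counts)
```

With the default `flip_y=0.01`, the counts would drift slightly from 750 and 250.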

Plot several randomly generated 2D classification datasets. The dataset helper ends with `return make_classification(n_samples=n_samples, n_features=2, n_informative=2, n_redundant=0, n…`.

It uses `make_classification` from scikit-learn but fixes some parameters, such as the number of classes, the number of clusters and `n_features`.

The toy-data helper is declared as `def create_dataset(n_samples=1000, weights=(0.01, 0.01, 0.98), n_classes=3, class_sep=0.8, n_clusters=1)`. We will generate 10,000 examples with an approximate 1:100 class distribution.
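The helper's body is not shown here, so this is only a sketch. It assumes the helper forwards its arguments to `make_classification` with two informative features and a fixed `random_state=0`, modeled on the truncated `return make_classification(...)` line. In this version, `n_clusters` becomes `n_clusters_per_class`.

```python
from collections import Counter

from sklearn.datasets import make_classification


def create_dataset(n_samples=1000, weights=(0.01, 0.01, 0.98), n_classes=3,
                   class_sep=0.8, n_clusters=1):
    # Assumed body: two informative features so the data can be plotted in 2D;
    # n_clusters is passed through as n_clusters_per_class.
    return make_classification(n_samples=n_samples, n_features=2,
                               n_informative=2, n_redundant=0, n_repeated=0,
                               n_classes=n_classes,
                               n_clusters_per_class=n_clusters,
                               weights=list(weights),
                               class_sep=class_sep, random_state=0)


X, y = create_dataset()
print(X.shape, Counter(y))
```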

For `n_features`, 3 is a good small number. The following function will be used to create a toy dataset. The imbalanced example imports `make_classification` from `sklearn.datasets`, `pyplot` from `matplotlib` and `where` from `numpy`. It then defines the dataset with `X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0, n_clusters_per_class=1, weights=[0.99], flip_y=0, random_state=4)`.
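Here is a runnable version of the imbalanced-dataset definition. It uses the same `make_classification` call plus `Counter` and `where`. The plotting step is left out so the sketch runs without matplotlib.

```python
from collections import Counter

from numpy import where
from sklearn.datasets import make_classification

# Define the dataset: a single weight of 0.99 leaves about 1% for class 1,
# and flip_y=0 disables random label noise.
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
                           n_clusters_per_class=1, weights=[0.99], flip_y=0,
                           random_state=4)

counter = Counter(y)
print(counter)

# Row indices of each class, e.g. for a per-class scatter plot.
for label in counter:
    row_ix = where(y == label)[0]
    print(label, len(row_ix))
```

With `flip_y=0`, the split here is exactly 9,900 to 100, the intended 1:100 ratio.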

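So one option is to increase `n_informative` to 2, and then the inequality is satisfied: $3 \times 1 \le 2^{2}$. Class 1 is drawn with `plt.scatter(X[y == 1, 0], X[y == 1, 1], color='tab:blue', edgecolors='k', s…`. As for the value 1 (the standard deviation of each cluster): from what I understood, this is the covariance, in other words, the noise.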
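The constraint can be checked directly. `satisfies_cluster_constraint` is a hypothetical helper that restates the inequality. The `make_classification` calls use `n_features=2`, `n_redundant=0` and `random_state=0`, chosen here so the feature counts stay valid.

```python
from sklearn.datasets import make_classification


def satisfies_cluster_constraint(n_classes, n_clusters_per_class, n_informative):
    # Hypothetical helper: each cluster needs its own hypercube vertex, and an
    # n_informative-dimensional hypercube has 2 ** n_informative vertices.
    return n_classes * n_clusters_per_class <= 2 ** n_informative


print(satisfies_cluster_constraint(3, 1, 1))  # 3 * 1 <= 2**1 is False
print(satisfies_cluster_constraint(3, 1, 2))  # 3 * 1 <= 2**2 is True

try:
    make_classification(n_features=2, n_informative=1, n_redundant=0,
                        n_classes=3, n_clusters_per_class=1)
    failed = False
except ValueError as err:
    failed = True
    print("ValueError:", err)

# Raising n_informative to 2 satisfies the inequality.
X, y = make_classification(n_features=2, n_informative=2, n_redundant=0,
                           n_classes=3, n_clusters_per_class=1, random_state=0)
print(X.shape, sorted(set(y.tolist())))
```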

The dataset contains 3 classes, 10 features and 5,000 samples. `flip_y` is the fraction of samples whose class is assigned randomly. `weights` is an array-like of shape `(n_classes,)` or `(n_classes - 1,)`, default `None`.
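Here is a sketch of what `flip_y` does, using the 3-class, 10-feature, 5,000-sample setup with an assumed `random_state=0`. With `flip_y=0`, the class counts equal the cluster sizes. The default `flip_y=0.01` relabels a random fraction of samples.

```python
import numpy as np
from sklearn.datasets import make_classification

params = dict(n_samples=5000, n_features=10, n_classes=3,
              n_clusters_per_class=1, random_state=0)

# flip_y=0: every label is the class of the sample's cluster, so the counts
# equal the cluster sizes (5000 split as evenly as possible).
_, y_clean = make_classification(flip_y=0, **params)
print(np.bincount(y_clean))

# Default flip_y=0.01: about 1% of samples get a class drawn at random,
# which can nudge the counts away from the cluster sizes.
_, y_noisy = make_classification(**params)
print(np.bincount(y_noisy))
```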

In `sklearn.datasets.make_classification`, how is the class `y` calculated? Ensemble of samplers. Plot randomly generated classification dataset.

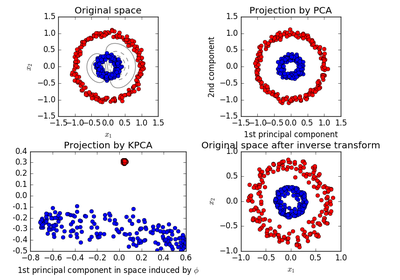

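The first 4 plots use `make_classification` with different numbers of informative features, clusters per class and classes. `n_samples` is the number of samples in the data, and `n_classes` is the number of classes (or labels) of the classification problem. The figure is created with `plt.figure(figsize=(8, 8))`.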

The `pylab` version sets the figure size with `pl.figure(figsize=(8, 6))`. For the 3-class dataset, `X, y = make_classification(n_samples=5000, n_features=10, n_classes=3, n_clusters_per_class=1)`. Then we'll split the data into train and test parts.

With `import sklearn.datasets as d`, the Python call `a = d.make_classification(n_samples=100, n_features=3, n_informative=1, n_redundant=1, n_clusters_per_class=1)` followed by `print(a)` prints the returned `(X, y)` tuple. `n_features` is the number of features for each sample, and `shuffle` controls whether the samples and the features are shuffled. Before we dive into the modification of SVM for imbalanced classification, let's first define an imbalanced classification dataset.
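Here is a sketch of what `print(a)` shows and how the features are laid out. It adds `shuffle=False` and `random_state=0`, which are not in the original, so the column order is predictable: informative first, then redundant, then useless.

```python
import numpy as np
import sklearn.datasets as d

# make_classification returns an (X, y) tuple, so `a` holds both arrays.
# shuffle=False and random_state=0 are assumptions added for predictability.
a = d.make_classification(n_samples=100, n_features=3, n_informative=1,
                          n_redundant=1, n_clusters_per_class=1,
                          shuffle=False, random_state=0)
X, y = a
print(X.shape, y.shape)

# Columns stay in order: informative, redundant, then one useless noise column.
# The redundant column is a linear combination of the informative ones; with a
# single informative feature it is a scaled copy, so the correlation is +/-1.
print(np.corrcoef(X[:, 0], X[:, 1])[0, 1])
```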

We can use the `make_classification` function to define a synthetic imbalanced two-class classification dataset.

To generate and plot a synthetic imbalanced classification dataset, the script begins with `from collections import Counter` and an import from `sklearn`. For the balanced 4-class case, `X, y = make_classification(n_samples=10000, n_features=10, n_classes=4, n_clusters_per_class=1)`. Then we'll split the data into train and test parts. Let's say I run this.

In ensemble classifiers, bagging methods build several estimators on different randomly selected subsets of data. One panel is titled with `pl.title("One informative feature, one cluster", fontsize='small')` and uses `X1, Y1 = make_classification(n_features=2, n_redundant=0, n_informative=1, n_clusters_per_class=1)`.
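Here is a sketch of the four `make_classification` panels without the plotting code. The titles are paraphrased, and `random_state=0` is an assumption. Each dataset has 2 features and no redundant ones, as in the `X1, Y1 = make_classification(...)` call above.

```python
from sklearn.datasets import make_classification

# Settings modeled on the four make_classification panels described above;
# n_samples keeps its default of 100 and random_state=0 is added here.
panels = {
    "One informative feature, one cluster per class":
        dict(n_informative=1, n_clusters_per_class=1),
    "Two informative features, one cluster per class":
        dict(n_informative=2, n_clusters_per_class=1),
    "Two informative features, two clusters per class":
        dict(n_informative=2),
    "Multi-class, two informative features, one cluster":
        dict(n_informative=2, n_clusters_per_class=1, n_classes=3),
}

datasets = {}
for title, kwargs in panels.items():
    X1, Y1 = make_classification(n_features=2, n_redundant=0,
                                 random_state=0, **kwargs)
    datasets[title] = (X1, Y1)
    print(f"{title}: X1 {X1.shape}, classes {sorted(set(Y1.tolist()))}")
```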

