Kmeans(n_clusters=1)

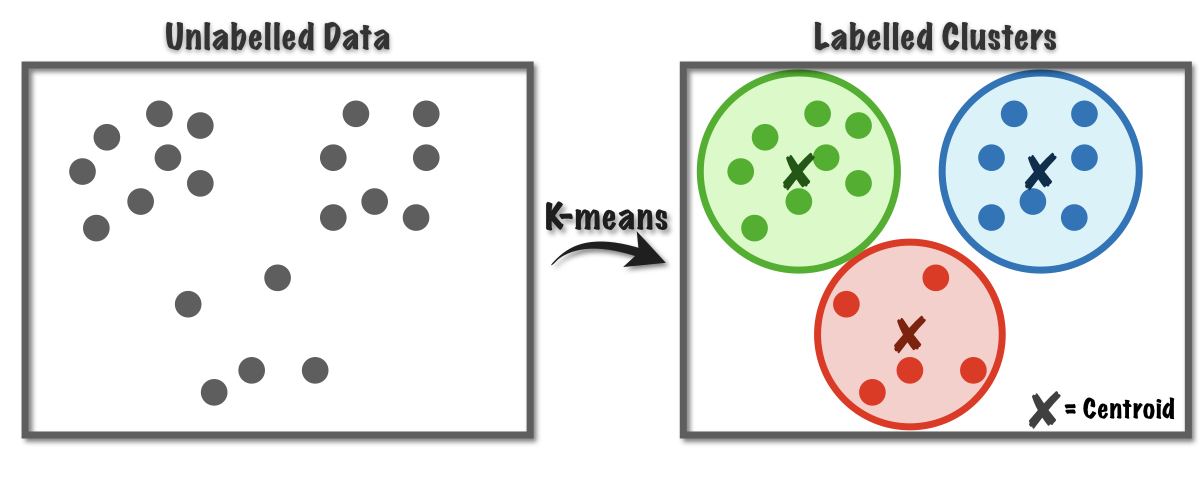

The k -means algorithm searches for a pre-determined number of clusters within an unlabeled multidimensional dataset. Suppose we have x1 x2 xn samples in m dimensions then k-means clustering divides the n samples into k clusters such that the total within-cluster sum of squares is minimized k-means clustering finds the optimal number of clusters k while minimizing the clustering criterion function J.

K Means Clustering Model In 6 Steps With Python By Samet Girgin Pursuitdata Medium

Read more in the User Guide.

Kmeans(n_clusters=1). K-means tends to favor round clusters perhaps thats why the middle section is being dividing in half rather than producing a large oval cluster. Fitdata bench_k_meansKMeansinit pca. Components_ n_clusters n_digits n_init 1 name PCA-based data data print 79 _.

Kmeans KMeansn_clusters i init k-means max_iter 300 n_init 10 random_state 0 kmeansfitx wcssappendkmeansinertia_ For implementing K-Means clustering we use KMeans and call fit to. How can we fix this error. The cluster center is the arithmetic mean of all the points belonging to the cluster.

GoalThis post aims to introduce k-means clustering using artificial data. This is the most important parameter for k-means. K-means method produces flat cluster structure ie.

Title ELBOW METHOD. I am trying it with KMeans clustering as the following. Read more in the User Guide.

Setting this to k-means employs an advanced trick to speed up convergence which youll use later. Each k cluster contains at least one data point. My current goal is to cluster the images into a number of categories so that I can later assess if the taste of foods depicted in images of the same cluster is similar.

Kmeans KMeans n_clustersi init k-means max_iter300 n_init10random_state0 i above is between 1-10 numbers. Kmeans KMeans n_clusters i init k-means random_state 42 kmeansfit X wcssappend kmeansinertia_ init argument is. Now we are using the Elbow method to find the optimal K value.

From sklearncluster import KMeans for i in range1 11. N_samples1 should be n_clusters3. Kmeans KMeansn_clusters i init k-means random_state 101 kmeansfitX elbowappendkmeansinertia_ import seaborn as sns snslineplotrange 1 20 elbow color blue pltrcParamsupdate figurefigsize 10 75 figuredpi.

N_init sets the number of initializations to perform. From sklearncluster import KMeans elbow for i in range1 20. K_means KMeansn_clustersk model k_meansfitX sum_of_squared_distancesappendk_meansinertia_.

Applying k-means for diffrent value of k and storing the WCSS from sklearncluster import KMeans wcss for i in range1 11. KMeans n_clusters 8 init k-means n_init 10 max_iter 300 tol 00001 verbose 0 random_state None copy_x True algorithm auto source K-Means clustering. In a later post Ill be revisiting this dataset for further analysis to see what additional insights can be gain by using different algorithms and increasing the dimensionality.

The standard version of the k-means algorithm is implemented by setting init to random. From sklearncluster import KMeans import numpy as np import pandas as pd import. KMeansn_clusters8 initk-means n_init10 max_iter300 tol00001 precompute_distancesauto verbose0 random_stateNone copy_xTrue n_jobs1 algorithmautosource.

In this case the seeding of the centers is deterministic hence we run the kmeans algorithm only once with n_init1 pca PCAn_components n_digits. It accomplishes this using a simple conception of what the optimal clustering looks like. Kmeans KMeansn_clusters i init random max_iter 300 n_init 10 random_state 0 kmeansfitx_scaled wcssappendkmeansinertia_ For plotting against the number of clusters.

I noticed that clusterKMeans gives a slightly different result depending on if n_jobs1 or n_jobs1. Parameters n_clusters int default8. Init parameter is the random initialization method.

This is important because two runs can converge on different cluster. From sklearncluster import KMeans wcss for i in range 1 11. To do that I load the images and process them in a format that can be fed into the VGG16 for feature extraction and then pass the features to my KMeans to cluster the images.

For each value of k we can initialise k_means and use inertia to identify the sum of squared distances of samples to the nearest cluster centre sum_of_squared_distances K range115 for k in K. From sklearncluster import KMeans k_means KMeansn_clusters3 random_state0 k_meansfitquotient But this is giving me an error. N_clusters sets k for the clustering step.

Below is the code I used to run the same KMeans clustering on a varying number of jobs.

Kmeans Clustering Value Error N Samples 1 Should Be N Cluster Stack Overflow

Understanding K Means Clustering In Machine Learning By Dr Michael J Garbade Towards Data Science

K Means Clustering Using Python Welcome Back Guys Hope You Had A Great By Luigi Fiori Medium

Posting Komentar untuk "Kmeans(n_clusters=1)"